Abstract

In this work, we address a new task, hand-object interaction image generation, which aims to generate a hand-object image conditioned on the given hand, object, and their interaction status. This task is challenging and worth studying for its many potential applications, such as AR/VR games and online shopping.

To address this problem, we propose a novel framework, HOGAN, which uses an expressive model-aware hand-object representation and leverages its inherent topology to build a unified surface space. In this space, we explicitly model the complex self-occlusion and mutual occlusion that arise during interaction. For the final image synthesis, we account for the different characteristics of the hand and the object and generate the target image in a split-and-combine manner.
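As a rough illustration of what a split-and-combine step can look like, the sketch below composites a separately generated hand image and object image over a background using per-pixel visibility masks. This is a minimal sketch under stated assumptions, not the HOGAN implementation: the function name `combine`, its arguments, and the simple alpha-compositing rule are all hypothetical, and the masks are assumed to already reflect occlusion (a hand pixel hidden by the object has hand mask 0).

```python
import numpy as np


def combine(hand_rgb, object_rgb, hand_mask, object_mask, background_rgb):
    """Blend hand and object images over a background (hypothetical helper).

    Images have shape (H, W, 3); masks have shape (H, W) with values in [0, 1].
    Masks are assumed to be occlusion-aware, so they do not overlap.
    """
    hand_m = hand_mask[..., None]      # add a channel axis for broadcasting
    object_m = object_mask[..., None]
    # Whatever is covered by neither the hand nor the object is background.
    background_m = np.clip(1.0 - hand_m - object_m, 0.0, 1.0)
    return hand_m * hand_rgb + object_m * object_rgb + background_m * background_rgb


# Toy 2x2 example: one hand pixel, one object pixel, two background pixels.
hand_rgb = np.full((2, 2, 3), 0.8)
object_rgb = np.full((2, 2, 3), 0.2)
background_rgb = np.zeros((2, 2, 3))
hand_mask = np.array([[1.0, 0.0], [0.0, 0.0]])
object_mask = np.array([[0.0, 1.0], [0.0, 0.0]])

out = combine(hand_rgb, object_rgb, hand_mask, object_mask, background_rgb)
```

In this toy setup the top-left pixel takes the hand color, the top-right pixel takes the object color, and the bottom row stays background. A learned generator would typically predict the per-branch images and masks rather than receive them as inputs.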

For evaluation, we build a comprehensive protocol to assess both the fidelity and the structure preservation of the generated images. Extensive experiments on two large-scale datasets, HO3Dv3 and DexYCB, demonstrate the effectiveness and superiority of our framework both quantitatively and qualitatively.

Generation Results

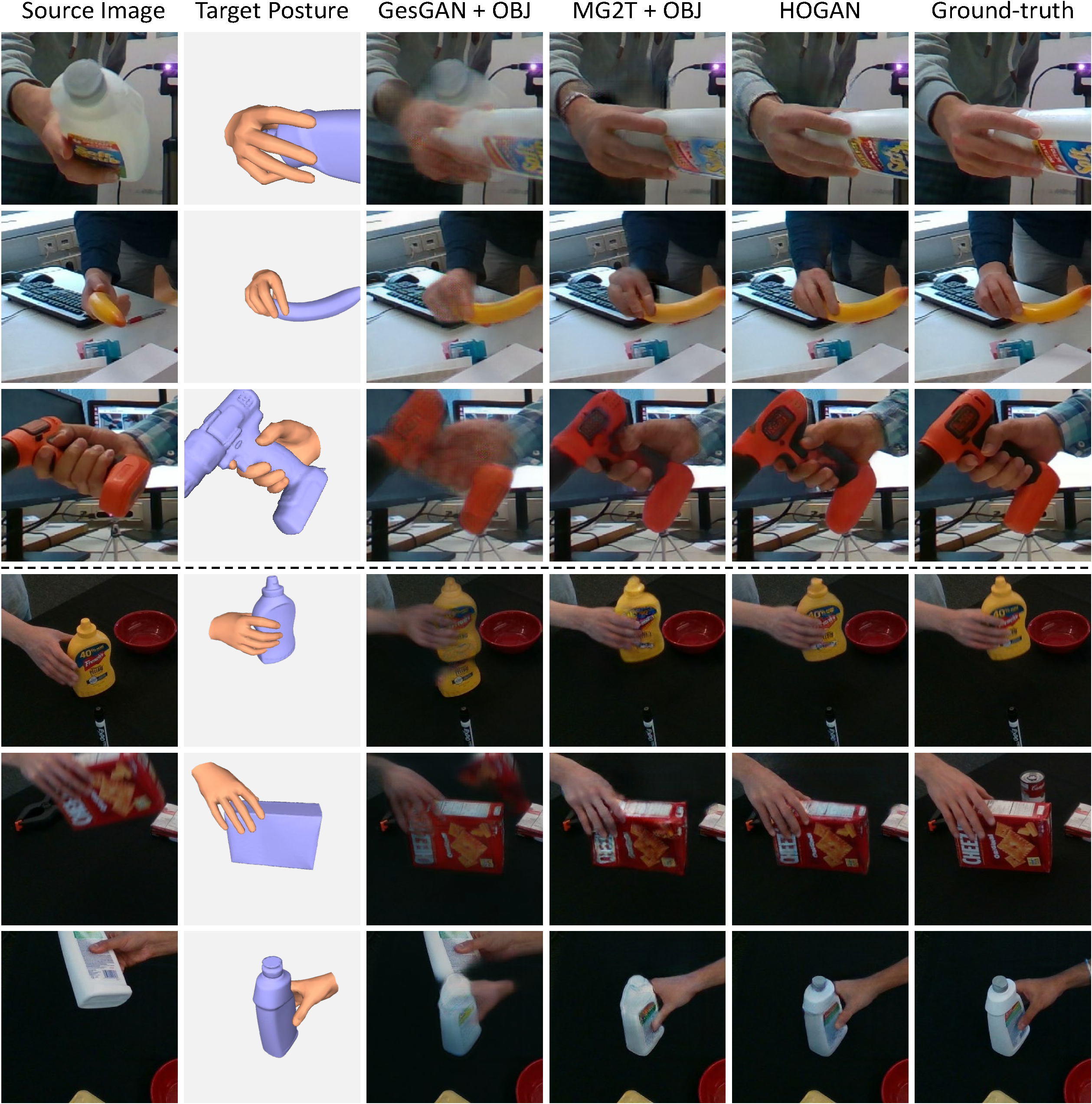

We perform qualitative comparisons with two baselines, GestureGAN+OBJ and MG2T+OBJ, on the HO3Dv3 and DexYCB datasets. In complex scenes where the hand and object interact closely, our method generates images with more reasonable spatial relationships and significantly outperforms the baseline methods.

Application

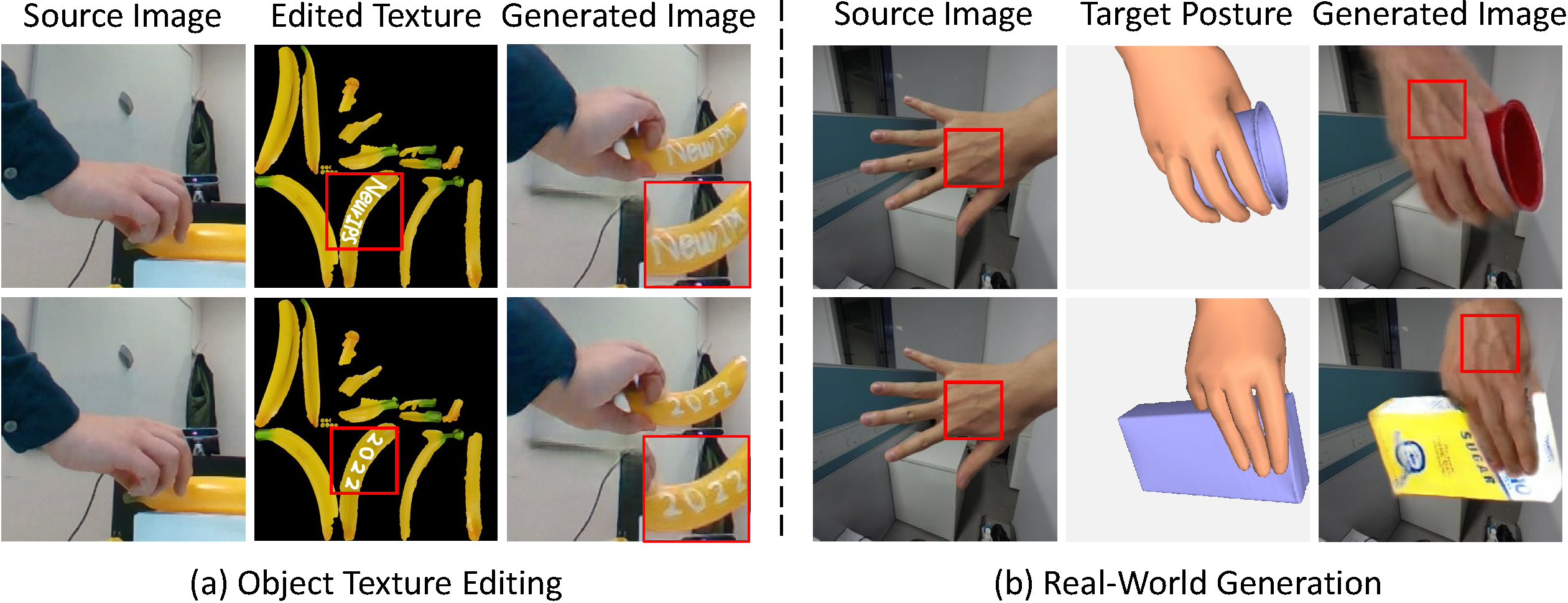

We explore two applications of HOGAN: object texture editing and real-world generation.

In object texture editing, we alter the object texture by adding characters, i.e., "NeurIPS" and "2022", and generate images conditioned on the edited textures. Figure (a) shows that the generated images preserve the edited characters on the object texture well.

Furthermore, we use a hand image captured in a real scene as the source image to test our pre-trained HOGAN framework. As shown in Figure (b), the generated images both preserve the appearance of the source image and satisfy the target posture condition.

BibTeX

@inproceedings{hu2022hand,
  author    = {Hu, Hezhen and Wang, Weilun and Zhou, Wengang and Li, Houqiang},
  title     = {Hand-Object Interaction Image Generation},
  booktitle = {NeurIPS},
  year      = {2022},
}